II. What is transparency?

Transparency can be defined in multiple ways. Several neighboring concepts are sometimes used as synonyms for it, including “explainability” (AI research in this area is known as “XAI”), “interpretability”, and “understandability”. Its opposite is commonly described as a “black box”.

Transparency is, roughly, a property of an application. It concerns how much can be understood, “in theory”, about a system’s inner workings. It can also mean a way of providing explanations of algorithmic models and decisions that are comprehensible to the user, which relates to the public perception and understanding of how AI works. Transparency can also be taken as a broader socio-technical and normative ideal of “openness”.

There are many open questions regarding what constitutes transparency or explainability, and what level of transparency is sufficient for different stakeholders. The precise meaning of “transparency” may vary with the specific situation. Whether there are several different kinds, or types, of transparency is an open scientific question. Moreover, transparency can refer to different things depending on whether the purpose is, say, to analyze the legal significance of unjust biases or to discuss those biases as features of machine learning systems.

Transparency as a property of a system

As a property of a system, transparency addresses how a model works or functions internally. Transparency is further divided into “simulatability” (an understanding of the functioning of the model), “decomposability” (understanding of the individual components), and algorithmic transparency (visibility of the algorithms).
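To make “decomposability” concrete, the following is a minimal sketch of a model whose output can be split into one understandable part per input. The feature names, weights, and bias are invented for illustration and do not come from any real system. Because the model is a plain weighted sum, a person could also recompute it by hand, which is roughly what “simulatability” asks for.

```python
# Hypothetical linear scoring model; all names and numbers are invented.
WEIGHTS = {
    "monthly_salary": -0.002,
    "dependents": 0.5,
    "months_unemployed": 0.1,
}
BIAS = 2.0


def contributions(applicant: dict) -> dict:
    """Return how much each input feature adds to (or subtracts from) the score."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}


def score(applicant: dict) -> float:
    """The score is the bias plus the sum of the per-feature contributions."""
    return BIAS + sum(contributions(applicant).values())


applicant = {"monthly_salary": 1000.0, "dependents": 2, "months_unemployed": 5}
for name, value in contributions(applicant).items():
    print(f"{name}: {value:+.2f}")
print(f"total score: {score(applicant):.2f}")
```

A deep neural network offers no such one-line breakdown per input, which is one reason it is called a black box.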

Given that many of the most efficient current deep learning models are black box models (almost by definition), researchers seem to assume that it is highly unlikely they can be made fully transparent. Because of this, the discussion focuses on finding the “sufficient level of transparency”. Would it suffice if algorithms disclosed how they came to their decision and provided the smallest change “that can be made to obtain a desirable outcome” (Wachter et al., 2018)? For example, if an algorithm refuses someone a social benefit, it should tell the person the reason, and also what he or she can do to reverse the decision.

The explanation should state, for instance, the maximum salary at which the benefit is approved (input), and how decreasing the salary would affect the decision (manipulation of the input). But the problem is that the right to know also applies to situations where the system makes mistakes. Then, it may be necessary to perform an autopsy on the algorithm and identify the factors that caused the system to make mistakes (Rusanen & Ylikoski, 2017). This cannot be done by manipulating the inputs and outputs alone.
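The input-manipulation style of explanation in the benefit example can be sketched as follows. This is a simplified illustration under stated assumptions: the decision rule `decide`, its income ceiling, and the helper `smallest_salary_change` are all hypothetical, and the brute-force search stands in for the optimisation procedure that Wachter et al. actually describe.

```python
from typing import Callable, Optional


def decide(salary: float) -> bool:
    """Hypothetical decision rule: approve the benefit at or below an income ceiling."""
    income_ceiling = 1500.0  # invented threshold, for illustration only
    return salary <= income_ceiling


def smallest_salary_change(
    model: Callable[[float], bool],
    salary: float,
    step: float = 10.0,
    max_steps: int = 1000,
) -> Optional[float]:
    """Find the smallest salary reduction (in multiples of `step`) that turns
    a refusal into an approval, treating `model` purely as a black box."""
    if model(salary):
        return 0.0  # already approved, nothing needs to change
    for k in range(1, max_steps + 1):
        reduction = k * step
        if reduction > salary:
            break  # a negative salary is not a meaningful input
        if model(salary - reduction):
            return reduction
    return None  # no change of this kind reverses the decision


applicant_salary = 1720.0
change = smallest_salary_change(decide, applicant_salary)
if change is None:
    print("No salary reduction would reverse the decision.")
else:
    print(f"Refused; a salary lower by {change:.0f} would be approved.")
```

The search only probes inputs and observes outputs. It can tell the applicant what to change, but it reveals nothing about why the rule is what it is, or whether the rule itself is mistaken.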

This illustration depicts a very simplified AI model tasked with recognizing all cats in data consisting of all kinds of animals. The model has inferred two patterns that make up a cat. To the model, they're just numbers, but to us, they look like describable patterns. However, the inferred patterns and features can look quite complicated to us. For more information, see https://distill.pub/2017/feature-visualization/.

Moreover, transparency serves many other functions in contemporary debates on machine learning models. It can be relevant for developing legislation or for ensuring public trust in AI. To handle these issues, the notion of transparency in AI is typically given a wider definition in terms of “comprehensibility”.

Transparency as comprehensibility

The comprehensibility – or understandability – of an algorithm requires that one should explain how a decision was made by an AI model in a way that is sufficiently understandable to those affected by the model. One should have a concrete sense of how or why a particular decision has been arrived at based on inputs.

However, it is notoriously difficult to translate algorithmically derived concepts into human-understandable concepts. In some countries, legislators have discussed whether public authorities should publish the source code of the algorithms they use in automated decision-making. However, most people do not know how to make sense of source code. It is thus hard to see how publishing the code would increase transparency.

* Take seed number from machine time for sample;

data _NULL_;

seedNumber= int(%sysfunc(TIME())) ;

call symput('seedNumber',seedNumber);

run;

%put &seedNumber;

* Order universe ;

proc sort data=universe;

by henro;

run;

* Make a sample, n=2000 ;

proc surveyselect data=universe method=srs

n=2000 seed=&seedNumber

out=sample;

run;

* Order sample ;

proc sort data=sample;

by henro;

run;

* Unite sample to universe, create variable TYPE;

data all;

merge universe(in=a)

sample(in=b);

by henro;

length TYPE $ 1.;

if a then TYPE='V'; * referenceGroup ;

if b then TYPE='K'; * experimentGroup ;

* assign values to variable REAOH ;

REAOH='PUOTOS';

run;

* Confirm that all 2000 people have a TYPE that is given a value 'K';

proc freq;

tables type;

run;

Would it be more helpful to publish the exact algorithms? In most cases, publishing the exact algorithms does not bring much transparency either, especially if you do not have access to the data used in a model.

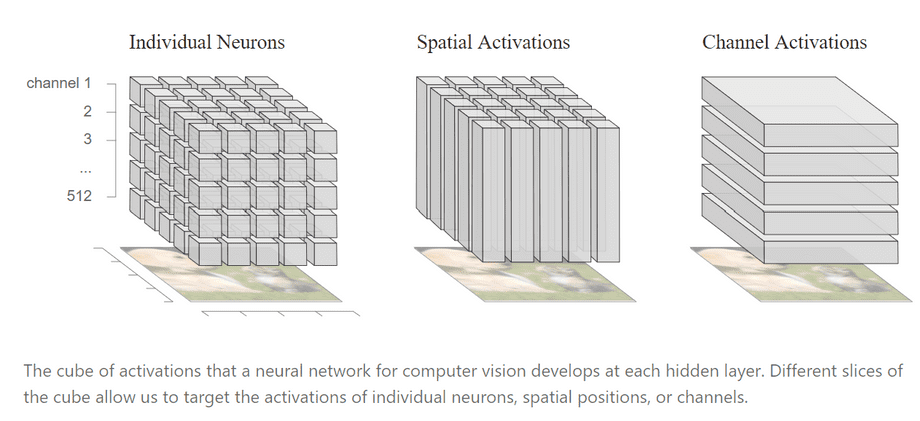

Nowadays, cognitive and computer scientists develop human-interpretable descriptions of how applications behave, and why. Approaches include, for example, the development of data visualization tools, interactive interfaces, verbal explanations or meta-level descriptions of the features of models. These tools can be extremely helpful for making AI applications more accessible. However, there is still plenty of work to be done.

Diagram CC-BY 4.0 Olah, et al., "The Building Blocks of Interpretability", Distill, 2018.

The fact that comprehensibility depends on subject- and culture-dependent components complicates matters further. For example, the logic of how visualizations are interpreted, or how inferences are drawn from them, varies across cultures. Thus, tech developers should make sure that the visual language they use is sufficiently understood by its intended audience.

Moreover, much depends on the degree of data or algorithmic literacy, for example knowledge of contemporary technologies. In some cultures, the vocabulary of contemporary technology is familiar, but in many others it may be completely novel. To increase understandability, there is clearly a need for significant educational efforts to improve algorithmic literacy, for example in “computational thinking” (Heintz et al., 2016). This user literacy will have a direct effect on transparency in terms of ordinary users’ basic understanding of AI systems. It may actually provide the most efficient and practical way to make the boxes less black for many people.